Since launching the Ask Luke feature on this site last year, we've added the ability for the system to respond to questions about product design by citing articles, videos, audio, and PDFs. Now we're introducing the ability to cite the thousands of images I've created over the years and reference them directly in answers.

Significant improvements in AI vision models have given us the ability to quickly and easily describe visual content. I recently outlined how we used this capability to index the content of PDF pages in more depth, making individual PDF pages a much better source of content in the Ask Luke corpus.

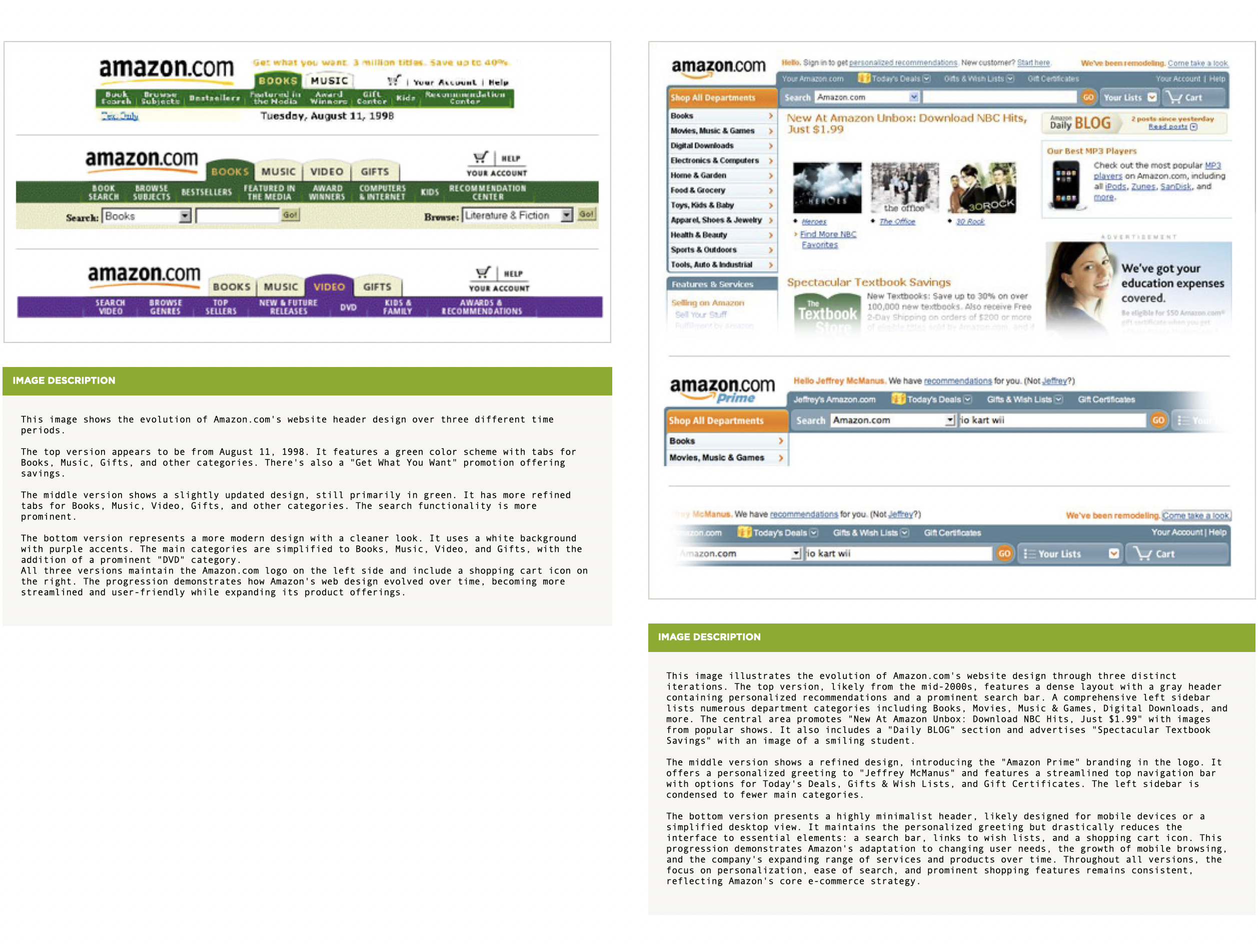

We applied the same process and pipeline to the thousands of images I've created for articles and presentations over the years. Essentially, each image on my Website gets parsed by a vision model and we add the resulting text-based description to the set of content we can use to answer people's design questions. Here's an example of the kinds of descriptions we're creating. As you can see, the descriptions can get pretty detailed when needed.

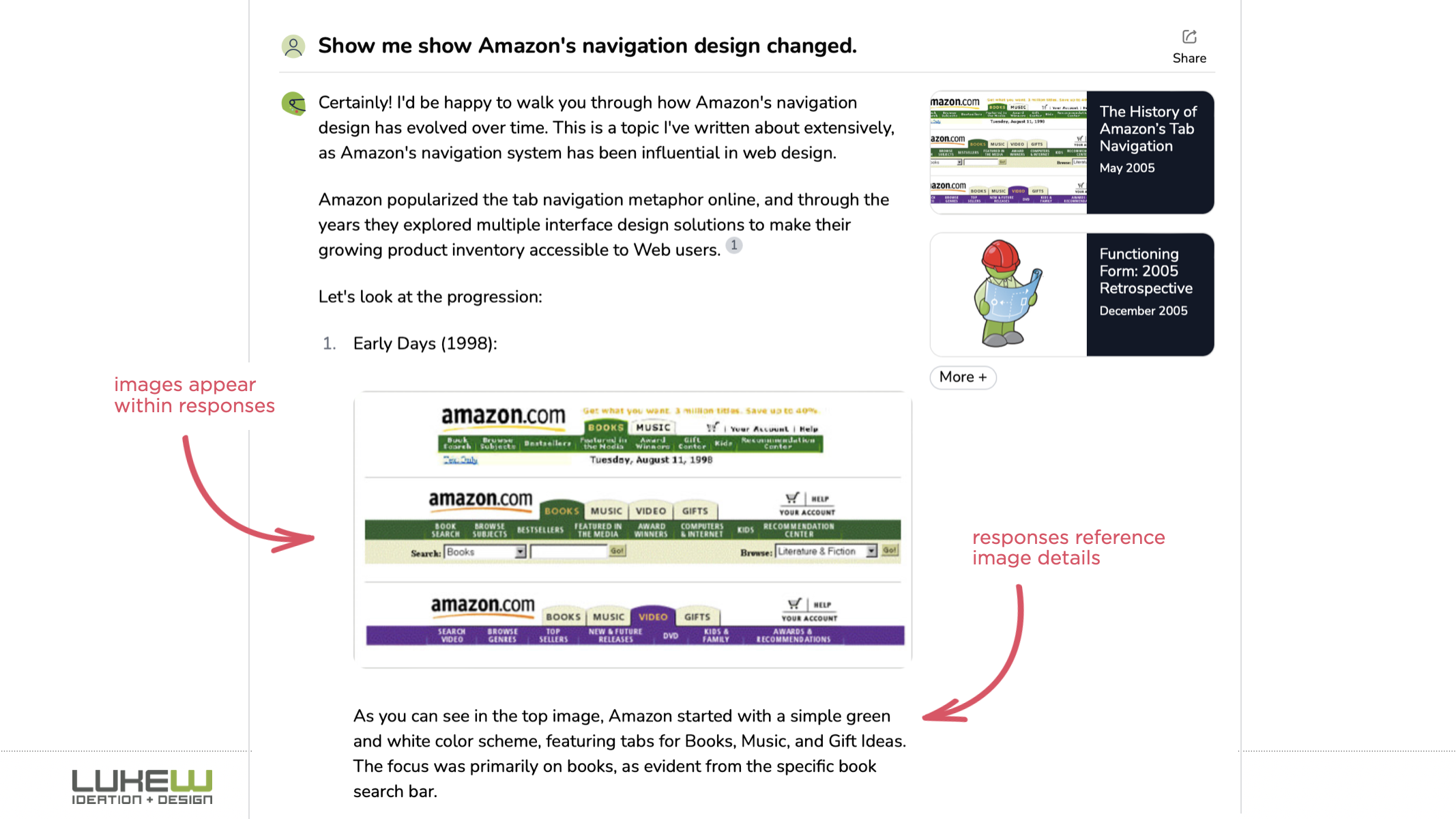
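To make the indexing step concrete, here is a minimal sketch of the idea in Python. It is not the actual Ask Luke pipeline: `ImageRecord`, `index_images`, and `search` are hypothetical names, the vision model is passed in as a plain `describe` function so no particular API is assumed, and the keyword-overlap ranking is only a stand-in for whatever retrieval the real system uses.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class ImageRecord:
    url: str          # where the image lives on the site
    description: str  # text produced by the vision model


def index_images(
    image_urls: Iterable[str],
    describe: Callable[[str], str],  # assumption: wraps a vision model call
) -> list[ImageRecord]:
    """Describe every image and collect text records that can be searched."""
    records = []
    for url in image_urls:
        text = describe(url).strip()
        if text:  # skip images that came back with no description
            records.append(ImageRecord(url=url, description=text))
    return records


def search(records: list[ImageRecord], query: str, limit: int = 3) -> list[ImageRecord]:
    """Rank records by naive keyword overlap with the query."""
    terms = set(query.lower().split())
    scored = []
    for record in records:
        words = set(record.description.lower().split())
        score = len(terms & words)
        if score:
            scored.append((score, record))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [record for _, record in scored[:limit]]


# Stand-in descriptions; a real run would call a vision model instead.
fake_descriptions = {
    "amazon-nav.png": "Amazon navigation bar with green and white tabs",
    "form-labels.png": "Web form with top-aligned labels",
}
records = index_images(fake_descriptions, lambda url: fake_descriptions[url])
matches = search(records, "amazon navigation")
```

Because the description is ordinary text, an image can sit in the same corpus as articles and PDF pages and be retrieved the same way.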

If someone asks a question where an image is a key part of the answer, our replies not only return streaming text and citations but inline images as well. In this question asking about Amazon's design changes over the years, multiple images are included directly in the response.

Images aren't just displayed where relevant; the answer also refers to them and often to what they contain. In the same Amazon navigation example, the answer mentions the green and white color scheme of the image in addition to its contents.
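One way to get images to land inline while text is still streaming is to have the generated answer carry a small marker wherever an image belongs, then swap that marker for an image event on the way to the browser. The sketch below assumes exactly that. The `[[image:ID]]` marker format, the `stream_events` name, and the event dictionaries are all hypothetical and may differ from how Ask Luke actually does it.

```python
import re
from typing import Iterable, Iterator

# Hypothetical marker the answer text uses to request an image, e.g. [[image:nav-1999]]
MARKER = re.compile(r"\[\[image:([\w.-]+)\]\]")


def stream_events(chunks: Iterable[str], image_urls: dict[str, str]) -> Iterator[dict]:
    """Turn streamed text chunks into text and image events, in order."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk

        # Emit every complete marker currently in the buffer.
        while True:
            match = MARKER.search(buffer)
            if not match:
                break
            if match.start() > 0:
                yield {"type": "text", "value": buffer[:match.start()]}
            image_id = match.group(1)
            if image_id in image_urls:  # unknown ids are dropped silently
                yield {"type": "image", "url": image_urls[image_id]}
            buffer = buffer[match.end():]

        # A marker may be split across chunks, so hold back anything from the
        # last "[[" (or a trailing "[") until more text arrives.
        hold = buffer.rfind("[[")
        if hold == -1 and buffer.endswith("["):
            hold = len(buffer) - 1
        if hold == -1:
            hold = len(buffer)
        if hold > 0:
            yield {"type": "text", "value": buffer[:hold]}
        buffer = buffer[hold:]

    if buffer:  # flush whatever is left when the stream ends
        yield {"type": "text", "value": buffer}


chunks = ["Amazon's early nav ", "used tabs. [[ima", "ge:nav-1999]] Later", " it changed."]
events = list(stream_events(chunks, {"nav-1999": "/images/nav-1999.png"}))
```

The hold-back step is the part that matters: without it, half of a marker could reach the page as visible text before the rest of it shows up.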

Now that we've got citations and images streaming inline in Ask Luke responses, perhaps adding inline videos and audio files cued to relevant timestamps might be next? We're already integrating those in the conversational UI so why not... AI is a hell of a drug.

Further Reading

Additional articles about what I've tried and learned by rethinking the design and development of my Website using large-scale AI models.

- New Ways into Web Content: rethinking how to design software with AI

- Integrated Audio Experiences & Memory: enabling specific content experiences

- Expanding Conversational User Interfaces: extending chat user interfaces

- Integrated Video Experiences: adding video experiences to conversational UI

- Integrated PDF Experiences: unique considerations when adding PDF experiences

- Dynamic Preview Cards: improving how generated answers are shared

- Text Generation Differences: testing the impact of new AI models

- PDF Parsing with Vision Models: using AI vision models to extract PDF contents

- Streaming Citations: citing relevant articles, videos, PDFs, etc. in real-time

- Streaming Inline Images: indexing & displaying relevant images in answers

Acknowledgments

Big thanks to Sidharth Lakshmanan and Sam Breed for the development help.