The Ask Luke feature on this site uses the thousands of articles, hundreds of PDFs, dozens of videos, and more I've created over the years to answer people's questions about digital product design. Since it launched a year ago, we've been iterating on the core of the Ask Luke system: retrieving relevant content to improve answers.

The most important job of any product interface is making its value clear and accessible to people. Most apps resort to some form of onboarding to accomplish this, but it's far more impactful to experience value than to be told it exists. Likewise, it's much more effective to learn through using an interface than through a tutorial explaining it.

These two factors make the seemingly simple job of "getting people to product value" quite difficult. Compounding the issue is the fact that interface solutions that accomplish this often feel simple and obvious, but only after they're uncovered. So getting to an interface that intuitively conveys value and purpose usually takes many iterations.

That's a long-winded introduction, but it's important context for the changes we made to Ask Luke. The purpose and value of this feature is to pull the most relevant bits of my writings, videos, audio, and files together to answer people's questions about digital product design. So we made a bunch of changes to put that even more front and center, and to make how Ask Luke works more obvious.

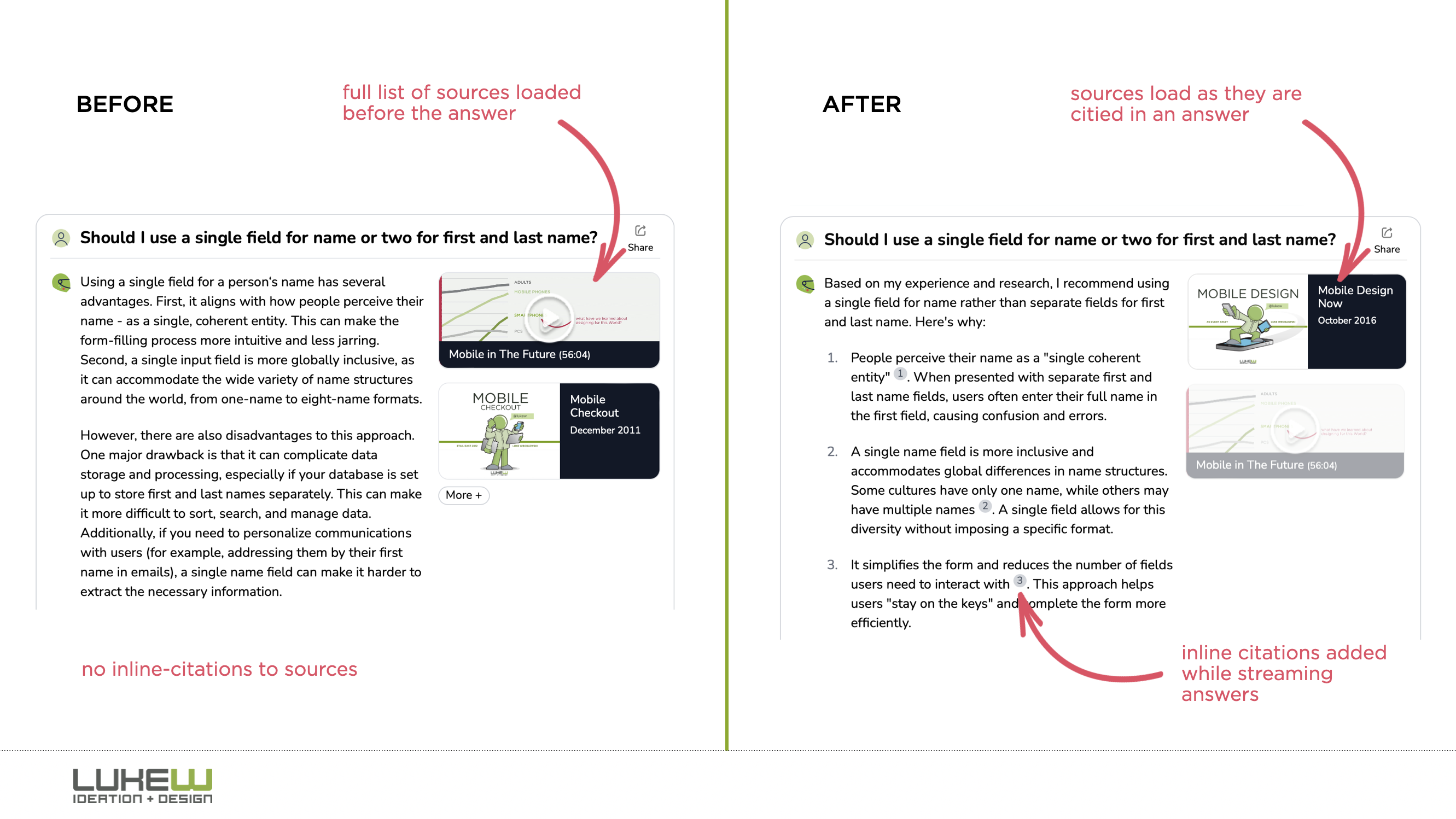

Now, as answers to people's questions stream in, we add citations in real time to the relevant articles, videos, PDFs, etc. being used to answer a question. We also add these citations to the list of sources on the right dynamically, as each one is used, instead of all at once before a question is answered.

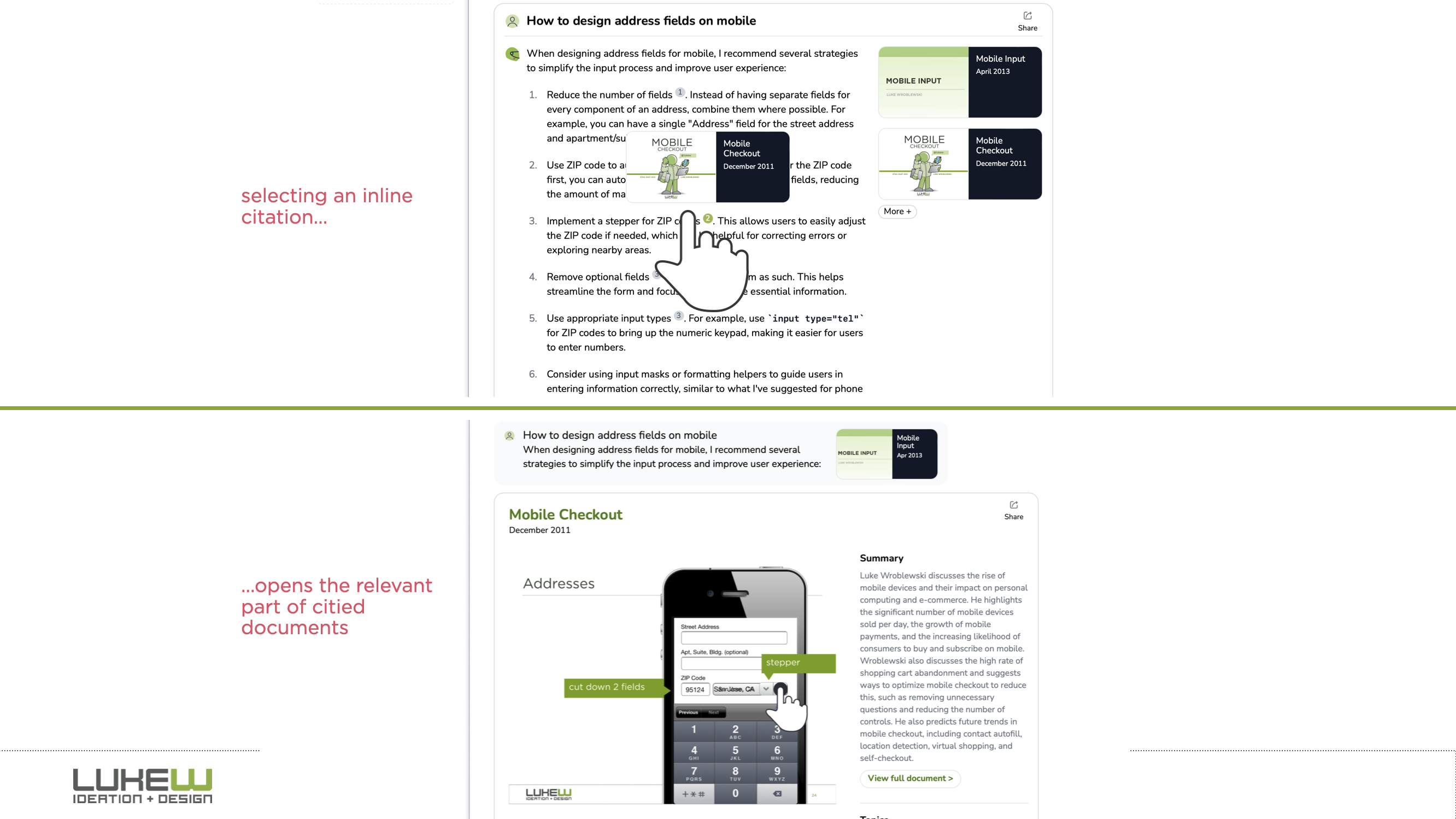
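
To make the streaming behavior a bit more concrete, here's a minimal sketch of one way it could work. This is not the actual Ask Luke code. It assumes a hypothetical setup where the model emits inline markers like `[[article-12]]` as it writes, and the names `stream_with_citations` and `_split_ready` are made up for illustration. Each streamed chunk is turned into display text with numbered citations, and the source list grows only when a source is first cited.

```python
import re
from typing import Iterable, Iterator

# Assumption: the model emits inline markers such as [[article-12]].
CITATION = re.compile(r"\[\[([\w-]+)\]\]")


def _split_ready(buffer: str) -> tuple[str, str]:
    """Return (ready, held_back) so no citation marker is cut in half."""
    start = buffer.rfind("[[")
    if start != -1 and "]]" not in buffer[start:]:
        # An opened marker has not been closed yet; wait for more text.
        return buffer[:start], buffer[start:]
    if buffer.endswith("["):
        # A lone "[" might be the first half of "[[".
        return buffer[:-1], buffer[-1:]
    return buffer, ""


def stream_with_citations(
    chunks: Iterable[str],
) -> Iterator[tuple[str, list[str]]]:
    """Yield (display_text, sources_so_far) for each streamed chunk."""
    sources: list[str] = []  # ordered by first citation
    buffer = ""

    def number(match: re.Match) -> str:
        source_id = match.group(1)
        if source_id not in sources:
            sources.append(source_id)  # source list grows as the answer streams
        return f"[{sources.index(source_id) + 1}]"

    for chunk in chunks:
        ready, buffer = _split_ready(buffer + chunk)
        if ready:
            yield CITATION.sub(number, ready), list(sources)
    if buffer:
        # Flush anything left over (for example, an unterminated marker).
        yield buffer, list(sources)


if __name__ == "__main__":
    demo = [
        "Onboarding works best ",
        "in context [[arti",
        "cle-12]] and video helps [[video-3]].",
        " See [[article-12]].",
    ]
    for text, cited in stream_with_citations(demo):
        print(repr(text), cited)
```

The one design choice worth noting is the small buffer: streamed text can split a marker across two chunks, so the sketch holds back any unfinished marker until the rest arrives rather than flashing half a citation in the interface.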

Previously, people could select any given source and view it in the Ask Luke conversational UI. With these updates, they are also taken to the relevant part of a source: to the relevant point in a video or the relevant page in a PDF. Since this is easier to see than read about, here's a quick video demonstrating these changes and hopefully making the value and purpose of Ask Luke a bit more obvious.
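
For the curious, jumping to the relevant part of a source can be sketched roughly like this. It's an illustration under assumptions, not how Ask Luke is built: the `Citation` type and `deep_link` function are hypothetical, and the sketch leans on two common URL fragment conventions, `#t=` (seconds) for a point in a video and `#page=` for a page in a PDF, which many browsers and viewers understand.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Citation:
    """Hypothetical record of where in a source an answer came from."""
    url: str
    kind: str  # "article", "video", or "pdf"
    start_seconds: Optional[int] = None  # for videos
    page: Optional[int] = None  # for PDFs (1-based)


def deep_link(citation: Citation) -> str:
    """Return a URL that opens the source at the cited location."""
    if citation.kind == "video" and citation.start_seconds is not None:
        # Temporal fragment: start playback at the given second.
        return f"{citation.url}#t={citation.start_seconds}"
    if citation.kind == "pdf" and citation.page is not None:
        # PDF open parameter: jump to the given page.
        return f"{citation.url}#page={citation.page}"
    # No location information: link to the source as a whole.
    return citation.url


if __name__ == "__main__":
    # example.com URLs are placeholders, not real sources.
    print(deep_link(Citation("https://example.com/talk.mp4", "video", start_seconds=754)))
    print(deep_link(Citation("https://example.com/slides.pdf", "pdf", page=12)))
    print(deep_link(Citation("https://example.com/article", "article")))
```

Falling back to the plain URL keeps older citations, or sources without location data, working the same way they did before.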

Further Reading

- Integrated Audio Experiences & Memory: enabling specific content experiences within a conversational UI

- Expanding Conversational User Interfaces: extending chat user interfaces to better support AI capabilities

- Integrated Video Experiences: adding video-specific experiences within conversational UI

- Integrated PDF Experiences: unique considerations when adding PDF experiences

- Dynamic Preview Cards: created on the fly to improve sharing answers

- Text Generation Differences: testing the impact of new AI models

- PDF Parsing with Vision Models: using AI vision models to extract content from PDFs

Acknowledgments

Big thanks to Sidharth Lakshmanan and Sam Breed for the engineering lift on these changes.