As artificial intelligence models extend what's possible with human-computer interactions, our user interfaces need to adapt to support them. Currently, many large language model (LLM) interactions are served up within "chat UI" patterns. So here are some ways these conversational interfaces could expand their utility.

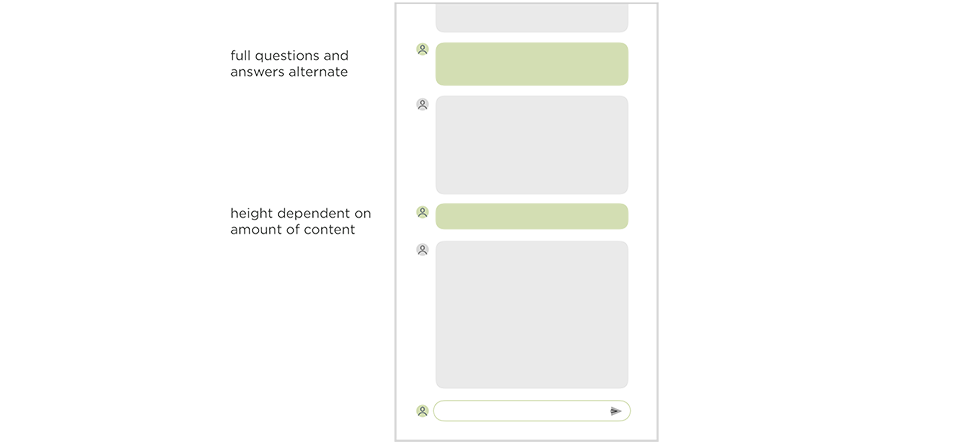

To start, what is a chat UI? Well, we all spend hours using them in our favorite messaging apps, so most people are familiar with the pattern. But here we go... each participant's contribution to a conversation is enclosed in some visual element (a bubble, a box). Contributions are listed in the order they are made (chronologically, bottom up or top down). And the size of each contribution determines the amount of vertical space it takes up on screen.

To illustrate this, here's a very simple chat interface with two participants, the newest contributions at the bottom, and the ability to reply below. Pretty familiar, right? This, of course, is a big advantage: we all use these kinds of interfaces daily, so we can jump right in and start interacting.

Collapse & Expand

When each person's contribution to a conversation is relatively short (like when we are one-thumb typing on our phones), this pattern can work well. But what happens when we're asking relatively short questions and getting long-form, detailed answers instead? That's a pretty common situation when interacting with large language model (LLM) powered tools like Ask LukeW.

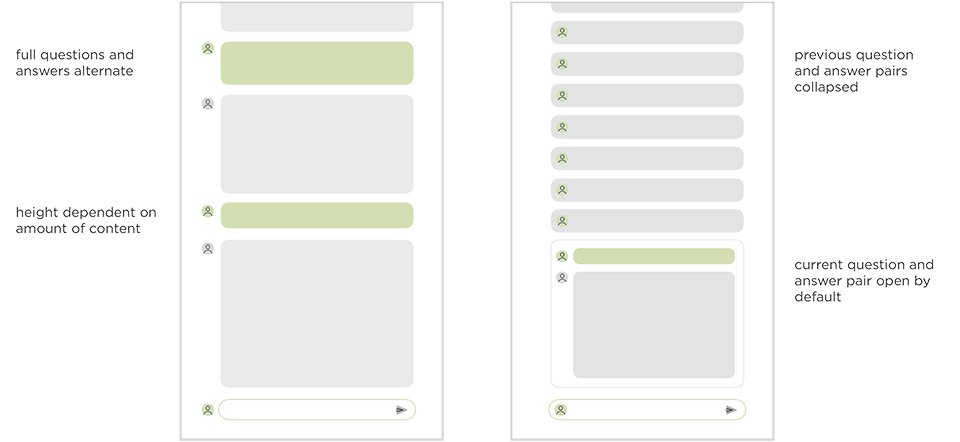

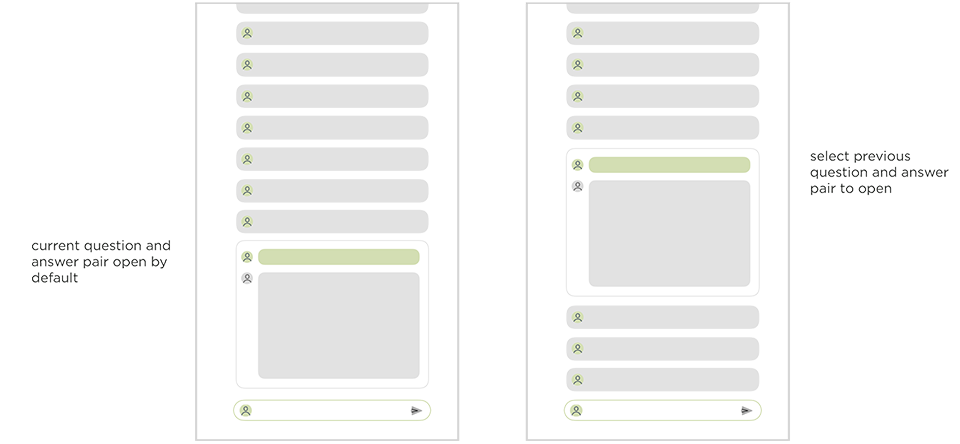

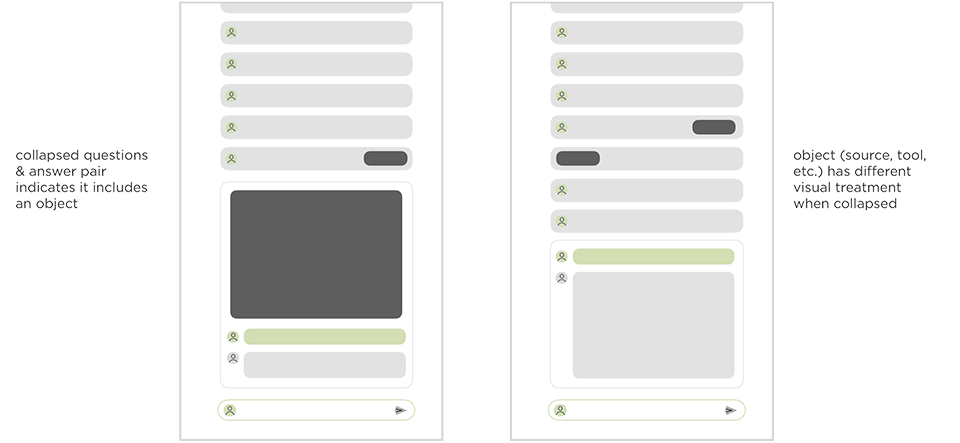

In this case, we might want to visually group question and answer pairs (I ask the system, it responds) and even condense earlier question and answer pairs to make them more easily scannable. This allows the current question and answer to clearly take priority in the interface. You can see the difference between this approach and the earlier, more common chat UI pattern below.

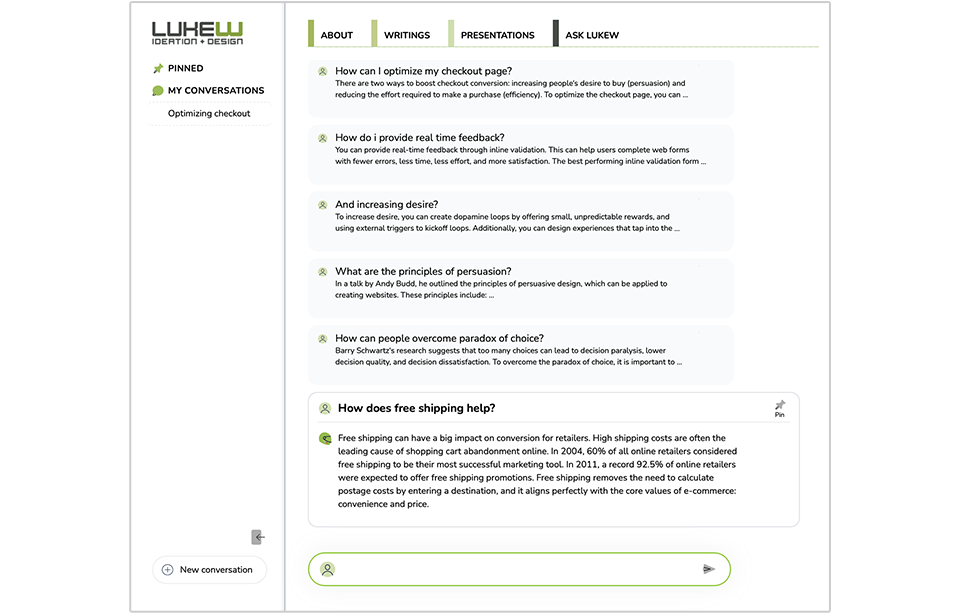

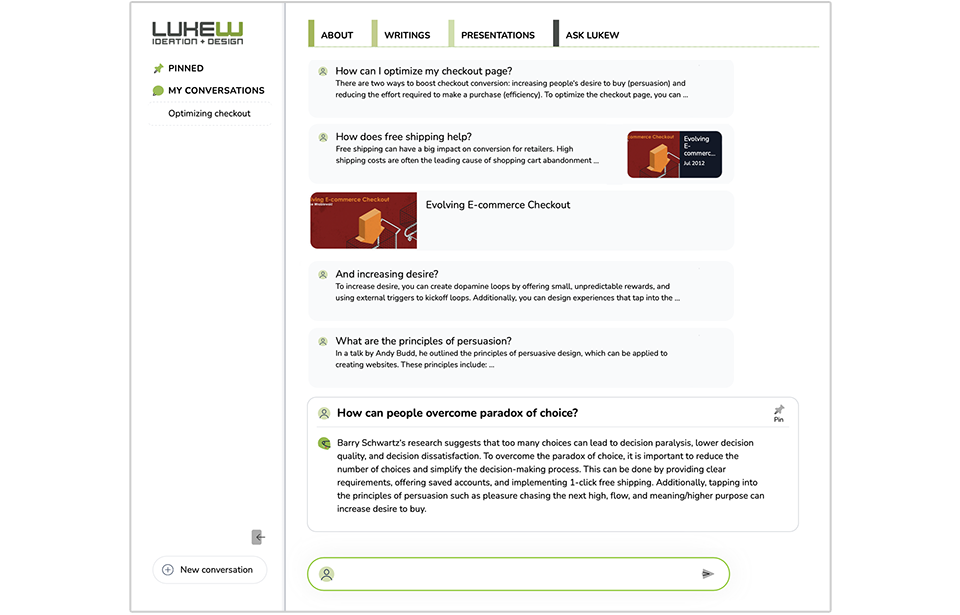

Here's how this design shows up on Ask LukeW. The previous question and answer pairs are collapsed and therefore the same size, making it easier to focus on the content within and pick out relevant messages from the list when needed.

If you want to expand the content of an earlier question and answer pair, just tap on it to see its contents; the other messages collapse automatically. (Maybe there are scenarios where having multiple messages open is useful too?)
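To make the collapse and expand behavior a bit more concrete, here's a minimal sketch of the state behind it. This is not how Ask LukeW is actually built; every name here (`Message`, `QAPair`, `ThreadState`, `groupIntoPairs`, `initThread`, `tapPair`, `isExpanded`) is hypothetical, and it assumes each question gets at most one answer.

```typescript
// Hypothetical model of a chat thread with collapsible question/answer pairs.
// None of these names come from Ask LukeW's implementation.
type Role = "user" | "assistant";

interface Message {
  role: Role;
  text: string;
}

interface QAPair {
  id: number;
  question: string;
  answer: string;
}

interface ThreadState {
  pairs: QAPair[];
  expandedId: number | null; // at most one pair is open at a time
}

// Group a flat, chronological message list into question and answer pairs.
// Assumption: each user message is followed by at most one assistant reply.
function groupIntoPairs(messages: Message[]): QAPair[] {
  const pairs: QAPair[] = [];
  for (const m of messages) {
    if (m.role === "user") {
      pairs.push({ id: pairs.length, question: m.text, answer: "" });
    } else {
      const last = pairs[pairs.length - 1];
      if (last) last.answer = m.text; // replies with no question are ignored
    }
  }
  return pairs;
}

// The newest pair starts expanded so the current exchange takes priority.
function initThread(messages: Message[]): ThreadState {
  const pairs = groupIntoPairs(messages);
  const last = pairs[pairs.length - 1];
  return { pairs, expandedId: last ? last.id : null };
}

// Tapping a pair expands it; every other pair collapses automatically
// because only one id can be stored.
function tapPair(state: ThreadState, id: number): ThreadState {
  return { ...state, expandedId: id };
}

function isExpanded(state: ThreadState, id: number): boolean {
  return state.expandedId === id;
}
```

Storing a single `expandedId` is what makes the other pairs collapse on their own. If multiple open messages turned out to be useful, swapping that field for a set of ids would be one way to allow it.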

Integrated Actions

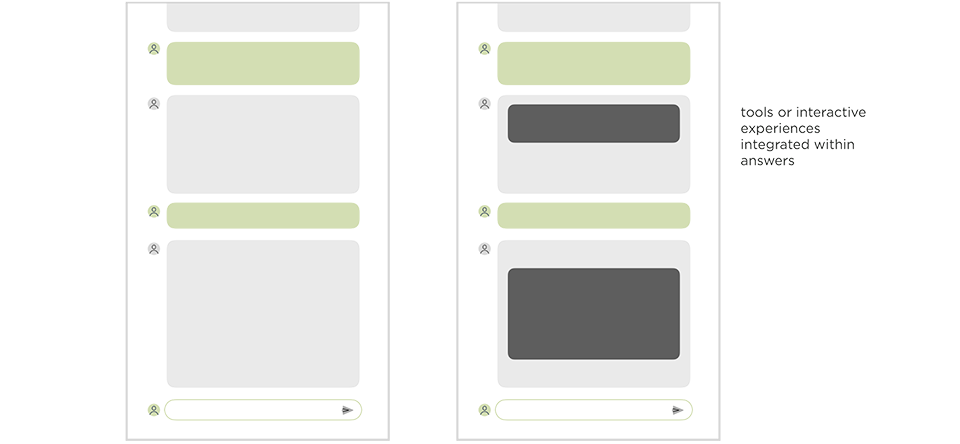

When chatting on our phones and computers, we're not just sending text back and forth but also images, money, games, and more. These mostly show up as another message in a conversation, but they can also be embedded within messages.

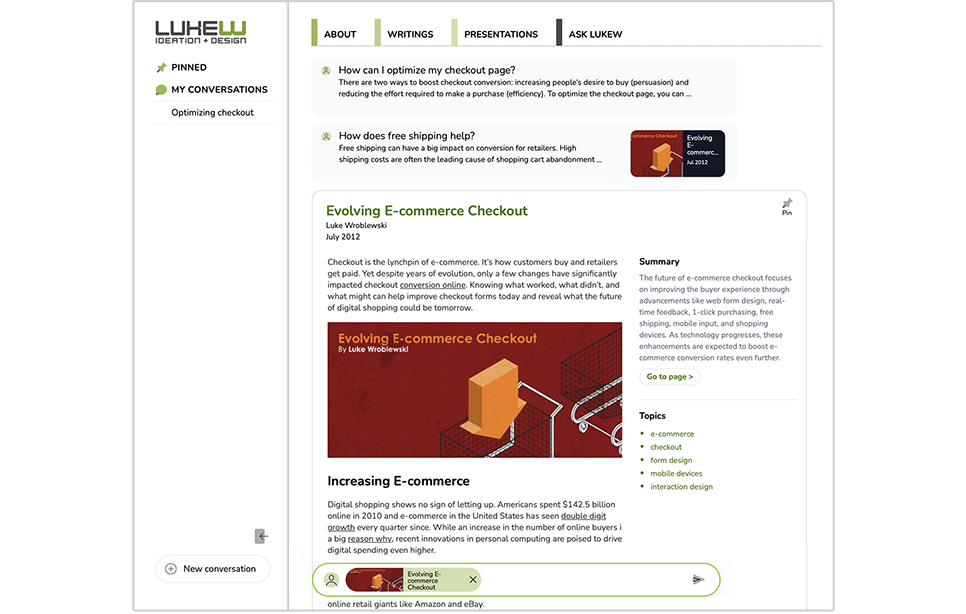

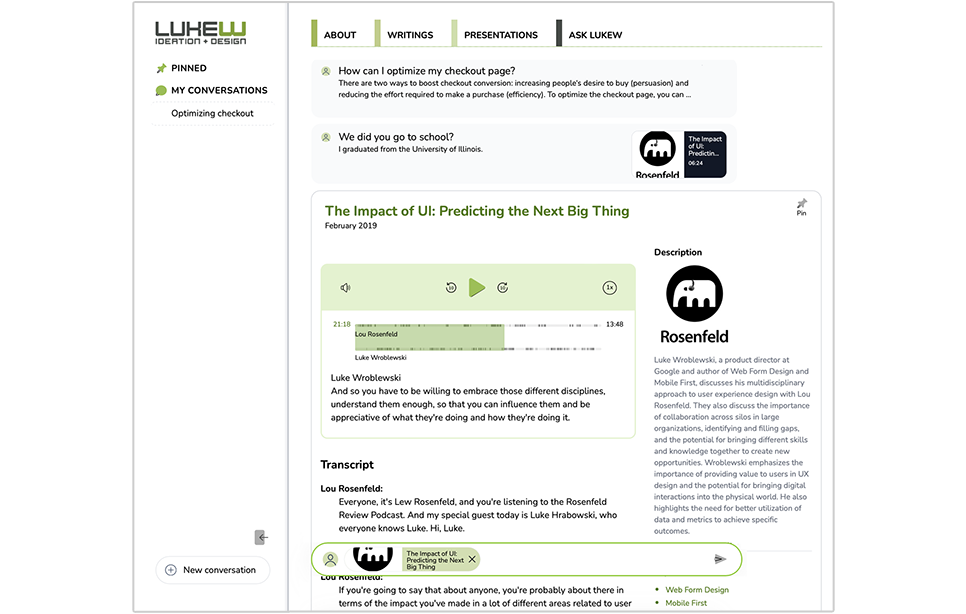

As large language model (LLM) powered tools increase their abilities, their responses to our questions can likewise include more than just text: an image, a video, a chart, or even an application. To account for this on Ask LukeW, we added a consistent way to present these objects within answers and allow people to select each to open or interact with it.

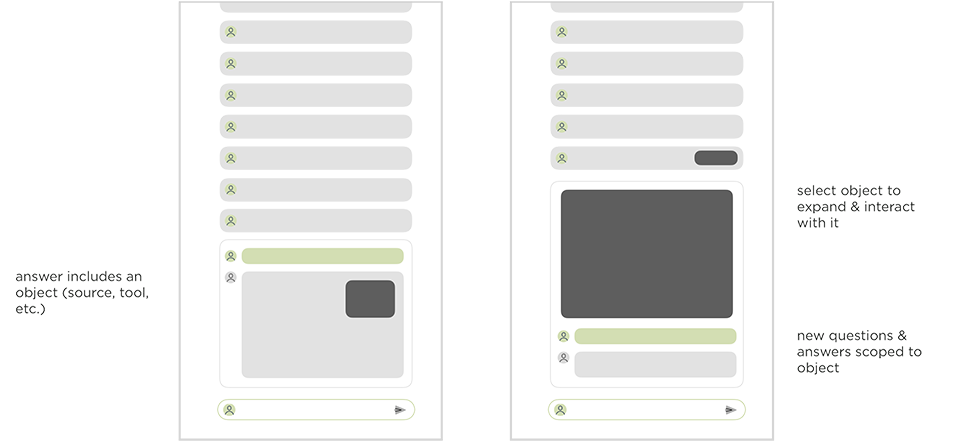

Here's how this looks for any articles or audio files that come back as sources for generated replies. Selecting the audio or article object expands it. This expanded view has content-specific tools like a player, scrubber, and transcript for audio files and the ability to ask questions only within this file.

After you're done listening to the audio, reading the article, interrogating it, etc., it will collapse just like all the prior question and answer pairs, with one exception. Object experiences get a different visual representation in the conversation list. When an answer contains an object, there's a visual indication on the right in the collapsed view. When it is an object experience (a player, reader, app), a more prominent visual indication shows up on the left. Once again, this allows you to easily scan for previously seen or used information within the full conversation thread.
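The two kinds of collapsed rows described above can be sketched as a small mapping from thread items to what the collapsed view shows. Again, this is an illustration under assumptions, not the actual Ask LukeW code: `ThreadItem`, `AttachedObject`, `CollapsedRow`, and `collapsedRow` are made-up names, and the list of object kinds is just a guess at what might appear.

```typescript
// Hypothetical object kinds that could show up in or alongside an answer.
type ObjectKind = "article" | "audio" | "image" | "video" | "chart" | "app";

interface AttachedObject {
  kind: ObjectKind;
  title: string;
}

// A thread item is either a question/answer pair (possibly with objects
// inside the answer) or an object experience such as a player or reader.
type ThreadItem =
  | { type: "qa"; question: string; answer: string; objects: AttachedObject[] }
  | { type: "objectExperience"; object: AttachedObject };

interface CollapsedRow {
  label: string;
  leftIndicator: ObjectKind | null; // prominent marker for object experiences
  rightIndicator: boolean;          // subtle marker: the answer contains an object
}

// Decide what a collapsed row displays for a given thread item.
function collapsedRow(item: ThreadItem): CollapsedRow {
  if (item.type === "objectExperience") {
    return {
      label: item.object.title,
      leftIndicator: item.object.kind,
      rightIndicator: false,
    };
  }
  return {
    label: item.question,
    leftIndicator: null,
    rightIndicator: item.objects.length > 0,
  };
}
```

Keeping the two indicators as separate fields mirrors the design: the left one marks something you used, the right one marks an answer that has something in it.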

Expanding, collapsing, object experiences... seems like there's a lot going on here. But fundamentally these are just a couple of modifications to conversational interface patterns we use all the time. So the adjustment when using them in tools like Ask LukeW is small.

Whether or not all these patterns apply to other tools powered by large language models... we'll see soon enough. Because as far as I can tell, every company is trying to add AI to their products right now. In doing so, they may encounter similar constraints to those that led us to these user interface patterns.