Despite all the mind-blowing advances in AI models over the past few years, they still face a massive obstacle to achieving their potential: people don't know what AI can do or how to guide it. One of the ways we've been addressing this is by having LLMs rewrite people's prompts.

Prompt Writing & Editing

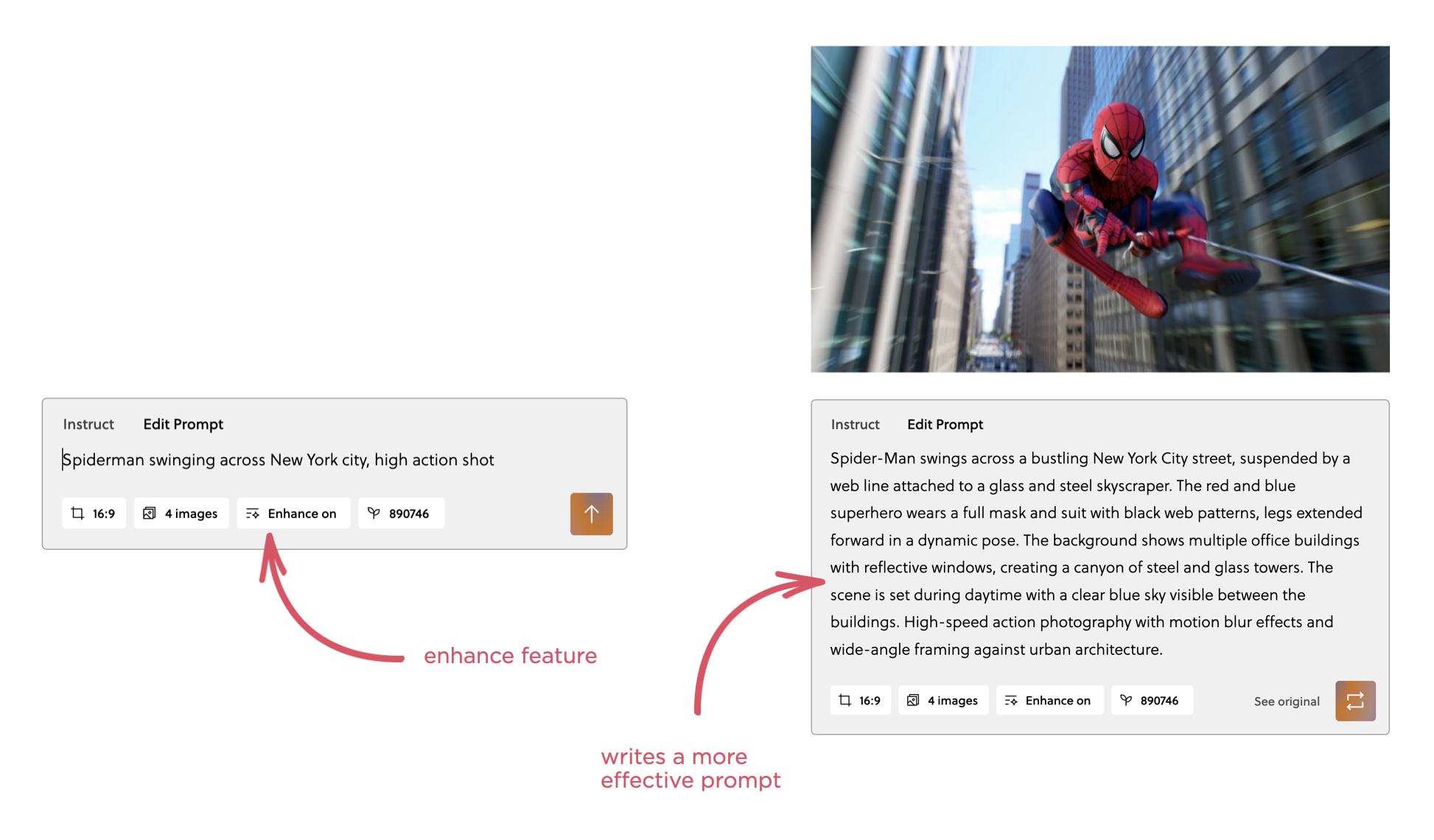

The preview release of the text-to-image model from Reve (our AI for creative tooling company) helps people get better image generation results by rewriting their prompts in several ways.

Reve's enhance feature (on by default) takes someone's image prompt and rewrites it in a way that optimizes for a better result but also teaches people about the image model's capabilities. Reve is especially strong at adhering to very detailed prompts, but many people's initial instructions are short and vague. To get to a better result, the enhance feature drafts a much more comprehensive prompt, which not only makes Reve's strengths clear but also teaches people how to get the most out of the model.

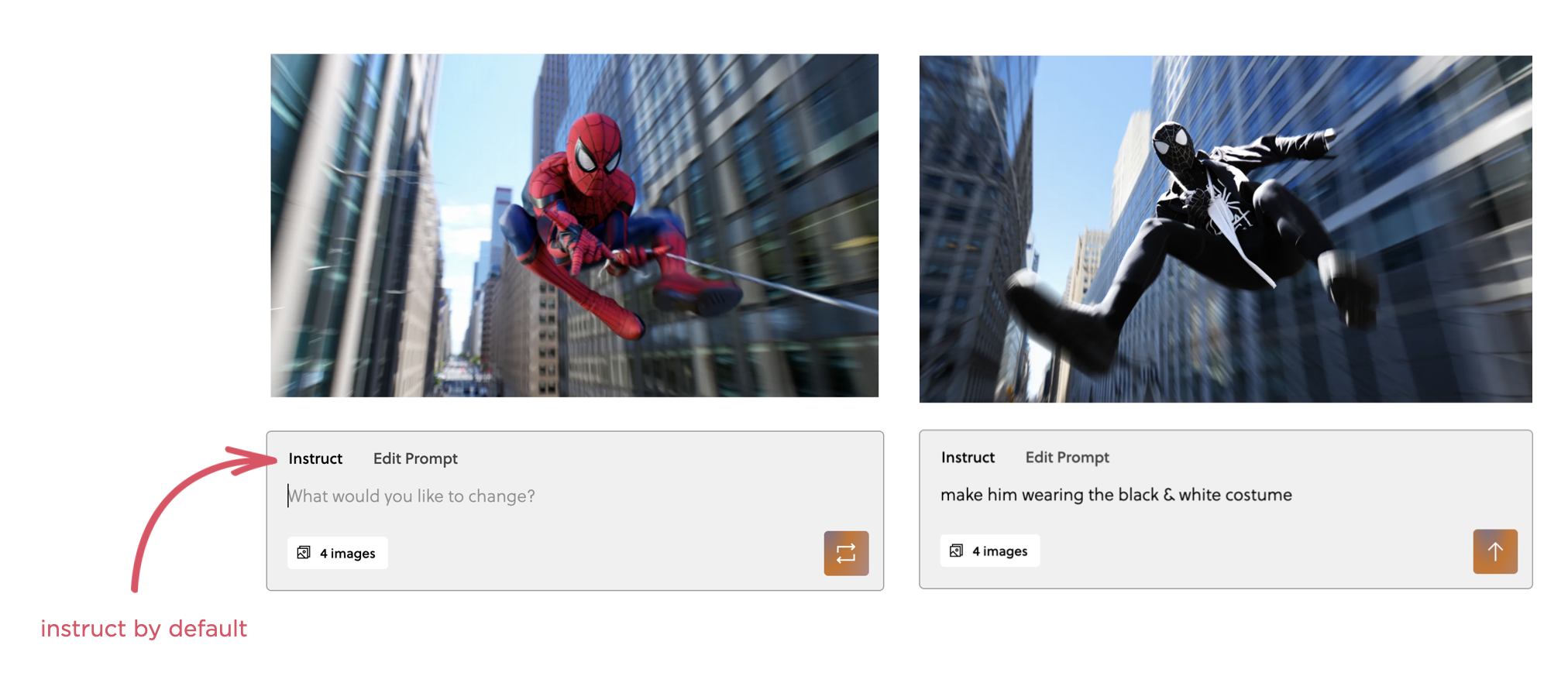

The enhance feature also harmonizes prompts when someone makes changes. For instance, if the prompt includes several mentions of the main subject, like a horse, and you change one of them to a cow, the enhance feature will update all the other "horse" mentions to "cow" for you.
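To make the idea of harmonizing concrete, here is a toy sketch in Python. Reve does this with an AI model, which can handle synonyms, pronouns, and context; the plain word-matching below is only an assumption-laden illustration of the end result, and the `harmonize` function is a hypothetical name, not part of Reve.

```python
import re


def harmonize(prompt: str, old: str, new: str) -> str:
    """Replace every whole-word mention of `old` (and its simple plural)
    with `new`, keeping the capitalization of each original mention.

    Hypothetical helper for illustration only; a real system would rely
    on a language model rather than pattern matching.
    """
    pattern = re.compile(rf"\b{re.escape(old)}(s?)\b", re.IGNORECASE)

    def swap(match: re.Match) -> str:
        word = new + match.group(1)  # carry over a trailing plural "s"
        if match.group(0)[0].isupper():
            word = word[0].upper() + word[1:]
        return word

    return pattern.sub(swap, prompt)


prompt = (
    "A horse gallops across a field. "
    "The horse's coat catches the light. "
    "Horses graze nearby."
)
print(harmonize(prompt, "horse", "cow"))
# A cow gallops across a field. The cow's coat catches the light. Cows graze nearby.
```

Even this simple version shows why the feature matters in long prompts: one edit to the subject would otherwise leave stale mentions scattered through the text.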

But aren't these long prompts too complicated for most people to edit? This is why the default mode in Reve is instruct, with prompt editing one click away. Through natural language instructions, people can edit any image they create without having to dig through a wall of prompt text.

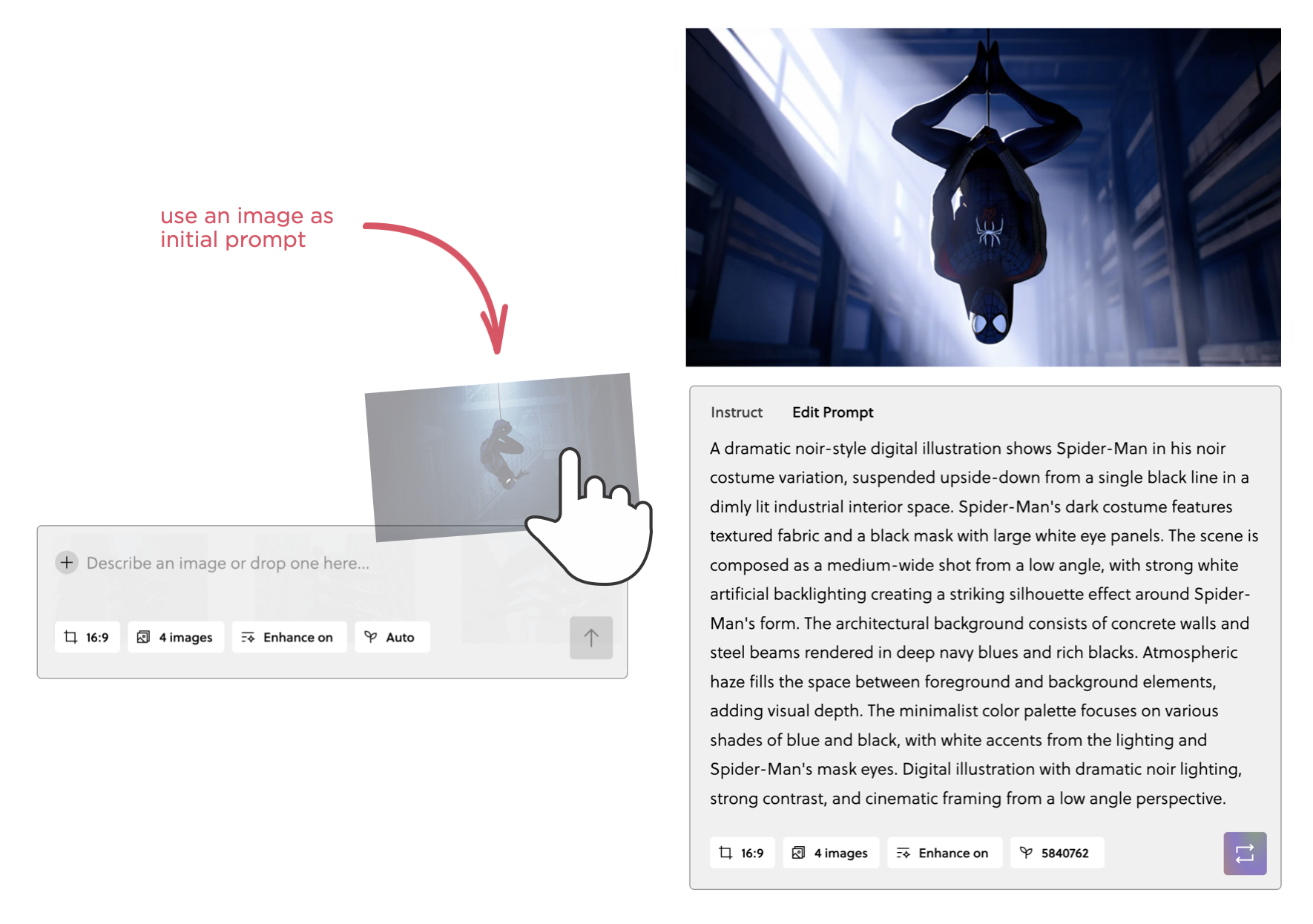

Even better, though, is starting an image generation with an image. In this approach you simply upload an image and Reve writes a comprehensive prompt for it. From there you can either use the instruct mode to make changes or dive into the full prompt to make edits.

Plan Creation & Tool Use

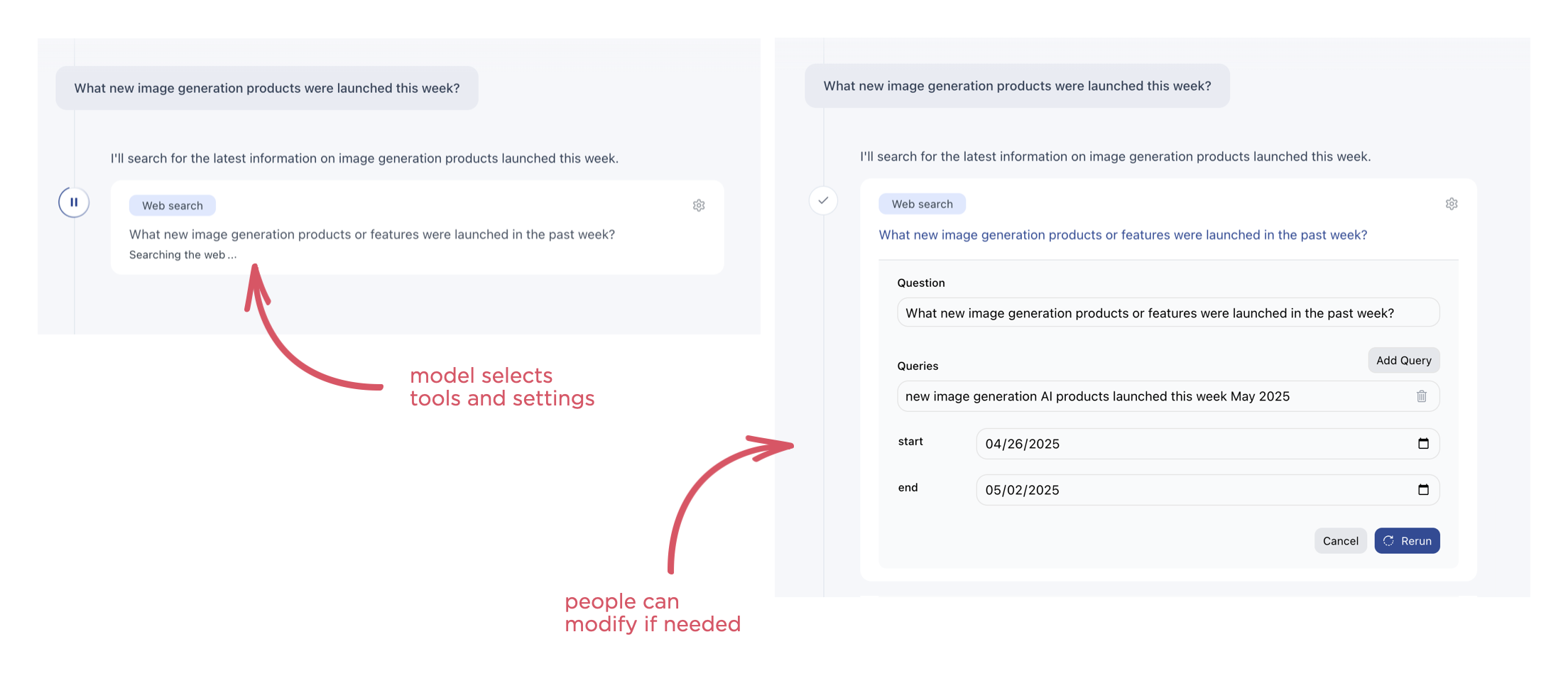

As if it wasn't hard enough to prompt an AI model to do what you want, things get even harder with agentic interfaces. When AI models can make use of tools to get things done in addition to using their own built-in capabilities, people now have to know not only what AI models can do but what the tools they have access to can do as well.

When someone gives an instruction in Bench (our AI for knowledge work company), the system uses an AI model to plan an appropriate set of actions. This plan includes not only the tools (search, browse, fact check, create PowerPoint, etc.) that make the most sense to complete the task but also their settings. Since people don't know what tools Bench can use or what parameters those tools accept, once again an AI model rewrites people's prompts for them into something much more effective.

For instance, when using the search tool, Bench will not only decide on and execute the most relevant search queries but also set parameters like date range or site-specific constraints. In most cases, people don't need to worry about these parameters. In fact, we put them all behind a little settings icon so people can focus on the results of their task and let Bench do the thinking. But in cases where people want to make modifications to the choices Bench made, they can.
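One way to picture this is as a plan made of tool steps, each with model-chosen parameters that a person can optionally override. The sketch below is purely illustrative: `ToolStep`, `Plan`, `apply_overrides`, and the parameter names (`date_range`, `site`, `max_pages`) are assumptions made up for this example, not Bench's actual data model.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ToolStep:
    tool: str  # e.g. "search" or "browse"
    params: dict[str, Any] = field(default_factory=dict)


@dataclass
class Plan:
    instruction: str  # what the person originally asked for
    steps: list[ToolStep] = field(default_factory=list)


def apply_overrides(plan: Plan, overrides: dict[int, dict[str, Any]]) -> Plan:
    """Return a new plan in which the person's edits (keyed by step index)
    win over the parameters the model picked. The original plan is untouched."""
    steps = [
        ToolStep(step.tool, {**step.params, **overrides.get(i, {})})
        for i, step in enumerate(plan.steps)
    ]
    return Plan(plan.instruction, steps)


# A plan the model might produce from a short instruction (hypothetical values).
plan = Plan(
    instruction="Summarize recent news about solid-state batteries",
    steps=[
        ToolStep("search", {"query": "solid-state battery news",
                            "date_range": "past_month", "site": None}),
        ToolStep("browse", {"max_pages": 5}),
    ],
)

# The person opens the settings and restricts the search to a single site.
edited = apply_overrides(plan, {0: {"site": "example.com"}})
print(edited.steps[0].params)
```

Keeping the model's choices as defaults and layering edits on top mirrors the settings-icon approach: most people never touch the parameters, but the ones who do change only what they care about.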

Behind the scenes in Bench, the system not only rewrites people's instructions to pick and make effective use of tools but also decides which AI models to call and when. How much of that should be exposed to people, so they can both modify it if needed and understand how things work, has been a topic of debate. There's clearly a tradeoff between doing everything for people automatically and giving them more explicit (but more complicated) controls.

At a high level, though, AI models are much better at writing prompts for AI models than most people are. So the approach we've continued to take is letting the AI models rewrite and optimize people's initial prompts for the best possible outcome.