As people continue to go online using an ever increasing diversity of devices, responsive Web design has helped teams build amazing sites and apps that adapt their designs to smartphones, desktops, and everything in between. But many of these solutions are relying too much on a single factor to make important design decisions: screen size.

What’s Wrong With Screen Size?

It's not that adapting an interface to different screen sizes is a bad thing. Quite the opposite. It’s so important that key metrics like conversion and engagement usually increase substantially when Web sites adjust themselves to fit comfortably within available screen space. For proof, just look at how mobile conversion rates increase significantly more in responsive redesigns than PC conversions do.

So if adapting to different screen sizes can have that kind of positive impact for a business, what’s the risk? As the kinds of devices people use to get online continue to diversify, relying on screen size alone paints an increasingly incomplete picture of how a Web experience could/should adapt to meet people’s needs. Screen size can also lead to bad decision-making when used as a proxy for determining:

- If a browser is running on a mobile device or not

- If Network connections are good (fast) or bad (slow)

- If a device supports touch, call-making, or other capabilities

There’s still no relationship between screen size and bandwidth. Instead, we should ensure our work’s as light as possible *for everyone*.

— Responsive Design (@RWD) October 28, 2013

None of these can actually be accurately inferred from screen size alone but they are comfortable assumptions that make managing device diversity substantially easier. The harsh truth however, is that output (screen size and resolution) is only one third of the equation -at best. Equally important to determining how to adapt an interface are input capabilities and user posture, which sadly screen size doesn’t tell us anything about.

Let me illustrate with a few specific examples.

Screen Size Limits

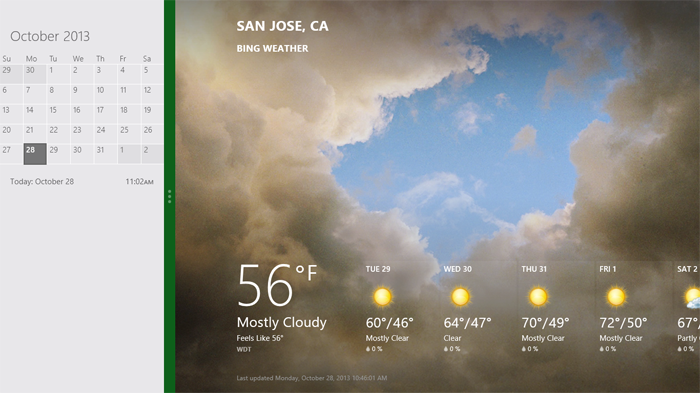

On tablets, PCS, and TVs, Microsoft’s Windows 8 platform allows any app, including the Web browser, to be “snapped” to the side of a screen thereby letting people interact with it while using another application in the primary view. As an example, the Windows 8 calendar application can be snapped alongside the weather app when making your daily plans.

Notice though, that the default view of the calendar application on Windows Phone 8 is quite different than the snapped view of the same app on a tablet, PC, or TV. They are both using the same amount of screen width (in relative pixels), but the mobile interface starts with a daily agenda instead of a small month view by default. The controls are also adjusted to the mobile form factor as you can see in the image below.

We can debate about why these differences exist and if they should or not but the bottom line is there’s more than screen size being taken into account in these application designs.

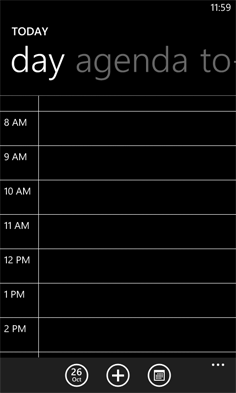

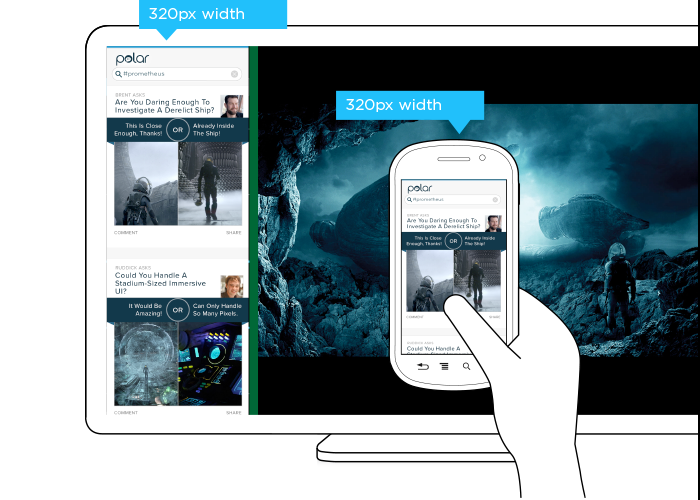

This simple example illustrates the challenge for Web designers. On Windows Phone devices, Internet Explorer uses 320 pixels for its device-width (the width it renders content at). On Windows 8 tablets, PCs, and TVs, snap mode uses the same 320 pixel device-width to lay out Web pages docked alongside other apps.

So with a responsive Web design, people get the same interface on a smartphone that they get in snap mode on a TV screen due to the same device-width (320 pixels). You can see this illustrated in the image below.

But should the interface be the same? A TV is usually viewed from about 10 feet away, while the average smartphone viewing distance is about 12 inches. This has an obvious impact on legibility for things like font and image sizes but it also affects other design elements like contrast. So a user’s posture (in this case viewing distance) should be taken into account when designing for different devices.

The input capabilities of a TV (D-pad) can differ wildly from a those of a mobile device (touch) or in some cases be the same (voice). Designing a simple list interface for d-pads requires a different approach than a creating a similar listing for use with touch gestures. So available input types should also be considered in a multi-device design.

When you take user posture and input capabilities into account when designing, an interface can change in big or small ways. For instance, contrast the design below for Windows 8 snap mode on a TV compared to a mobile version of the same feature.

While the screen size (320 pixel device-width) has stayed consistent, the interface has not. Larger fonts, a simplified list view, inverted colors, and a lot more have changed in order to support a different user posture (10 ft away vs. 12 inches), and different input types (d-pad vs. touch). As you can see, screen size doesn’t give us a complete picture of what we need to know to design an appropriate interface.

Before you dismiss this as an isolated use case on Windows 8 devices, note that Android smartphones and tablets also offer the ability to interact with multiple applications side by side and Android-powered TVs won’t be far behind. In fact, we’ve already got Android eyepieces like Google Glass that pose similar challenges.

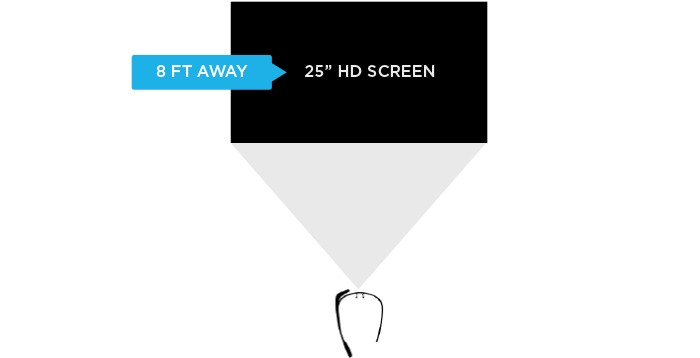

Google Glass allows you to view applications and Web pages using a display that projects information just above your line of sight. The official specs describe the Glass display as a “25 inch HD screen viewed from 8 feet away.” So right up front, viewing distance matters.

Like most mobile Web browsers, Glass uses a dynamic viewport to resize Web pages for its screen. On Glass the default viewport size is set to 960 pixels and pages are scaled down accordingly. So if someone is viewing the Yahoo! Finance site, it displays like this in the Glass browser (below). Essentially, it is shrunk down to fit.

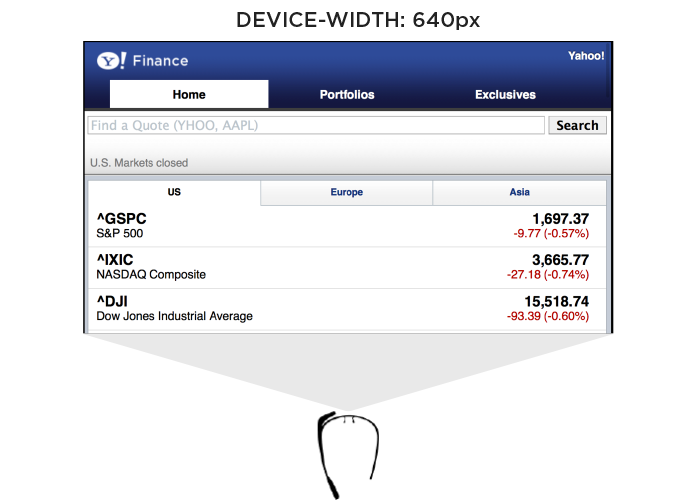

The Web browser on Glass also allows pages built responsively to adapt to a more suitable device-width. In this case, 640 pixels. So a Web page designed to work across a wide range of screen sizes would render differently on Glass. Given that 600 pixels is a common device-width for 7 inch tablets, the page you’d see on Glass would look more like the following -adapted for a smaller viewport size.

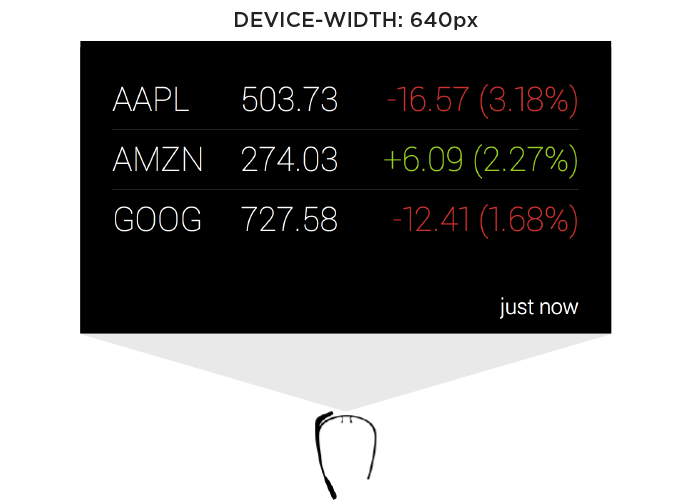

In addition to the Web browser, Google Glass also includes a number of “glassware” applications built with the same Web technologies used to create Web pages. One of these apps provides access to stock price changes -very similar to what you see displayed prominently on the Yahoo! Finance site. However, the presentation of this information is very different. As you can see in the image below it’s been designed as if you are viewing a 25” screen from 8 feet away. This design is much more suited to a wall-sized display than a small tablet screen.

This Glassware interface is also designed to make scrolling through information using the touchpad on the side of Google Glass (which comfortably supports sweeping left/right and up/down gestures) fast and easy.

So again user posture and input capabilities inform how to design for a specific device. Screen size alone doesn’t tell us enough.

Supporting Everything

In order for an interface to adapt appropriately to different output, input, and user posture, we need to know what combination of the three we’re are dealing with at any given time. On the Web that’s been notoriously difficult. We can’t tell TVs from smartphones or what devices support touch without relying on some level of user agent detection, which is often looked at dubiously.

Because of this, Web developers and designers have smartly decided to simply embrace all forms of input: touch, mouse, and keyboard for starters. While this approach certainly acknowledges the uncertainty of the Web, I wonder how sustainable it is when voice, 3D gestures, biometrics, device motion, and more are factored in. Can we really support all available input types in a single Web interface?

A similar approach to user posture is increasingly common. That is, an interface can simply ask people if they want a lean-back 10 foot experience, a data dense 2 foot experience, or something more suited for small portable screens. This makes user posture something that is declared by people rather than inferred by device. Once again, this kind of “support everything” thinking embraces the diversity of the Web whole-heartedly. However it puts the burden on each and every user to understand different modes, when they are appropriate, and change things accordingly. (Personally I feel we should be able to provide an optimal experience without requiring people to work for it.)

Ultimately trying to cover all input types and all user postures in a single interface is a daunting challenge. It’s hard enough to cover all the screen sizes and resolutions out there. Couple that with the fact that an interface that tries to be all things to all devices might ultimately not do a good job for any situation. So while I embrace supporting the diversity of the Web as much as possible, I worry there’s a limit to the practicality of this approach long-term as the amount of possible inputs, outputs, and user postures continues to grow.

Don’t Assume Too Much

These examples are intended to convey one important point: don’t assume screen adaptation is a complete answer for multi-device Web design. Responsive Web design has given us a powerful toolset for managing a critical part of the multi-device world. But assuming too much based on screen size can ultimately paint you into a corner.

It’s not that adapting to screen size doesn’t matter, as I pointed out numerous times, it really does. But if you put too much stock in screen size or don’t consider other factors, you may end up with incomplete or frankly inappropriate solutions. How people interact with the Web across screens continues to evolve rapidly and our multi-device design methods need to be robust enough to evolve alongside.