First person user interfaces allow people to digitally interact with the real world as they are currently experiencing it. Through a set of "always on" sensors, these applications layer information on people's immediate view of the world and turn the objects and people around them into interactive elements.

Simply place a sensor-rich computing device in a specific location, near a specific object or person, and automatically get relevant output based on who you are, where you are, and who or what is near you. This enables digital interactions with the real world that help people: navigate the space around them; augment their immediate surroundings; and interact with nearby objects, locations, or people.

Through handheld and embedded GPS units, we’ve had digital tools that help people navigate the space around them for quite a while. But our interactions with these interfaces were primarily managed from above. In other words, we saw our current position in the world as a point on a map. More recently, three-dimensional representations of spatial navigation became more widespread in GPS units. This meant navigation cues designed from our current perspective.

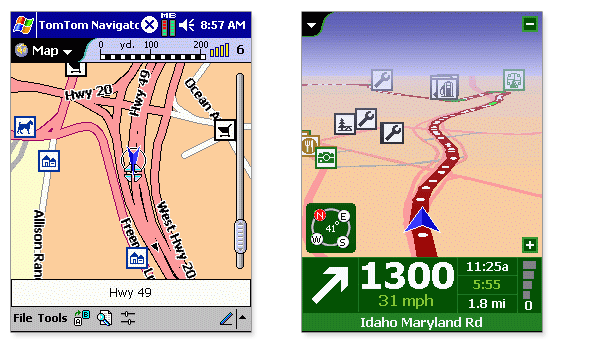

Consider the difference between the two screens from the TomTom navigation system shown above. The screen on the left provides a two-dimensional, overhead view of a driver’s current position and route. The screen on the right provides the same information but from a first person perspective. This first person user interface mirrors your perspective of the world, which hopefully allows you to more easily follow a route. When people are in motion, first person interfaces can help them orient quickly and stay on track without having to translate two-dimensional information to the real world.

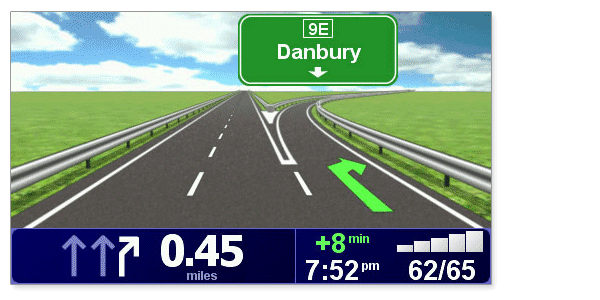

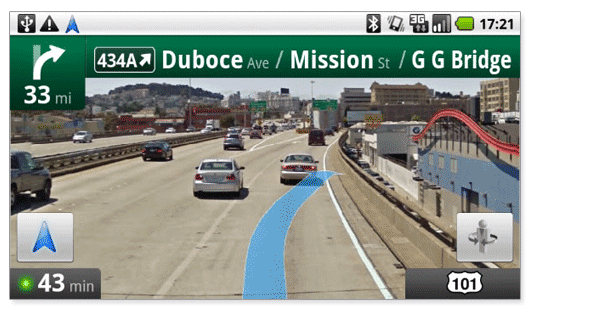

TomTom’s latest software version goes even further toward mirroring our perspective of the world by using colors and graphics that more accurately match real surroundings. But why redraw the world when you can provide navigation information directly on it? Google Maps Navigation uses actual satellite and street view images of the world around you and overlays directions and routes on them.

In lots of cases, there are great reasons for software not to directly mimic reality. Not doing so allows us to create interfaces that enable people to be more productive, communicate in new ways, or manage an increasing amount of information. In other words, to do things we can’t otherwise do in real life.

But sometimes, it makes sense to think of the real world as an interface, and to design interactions that make use of how people actually see the world. In the case of navigating physical space, it may make sense to take advantage of first person user interfaces.