To date, most digital image and video editing tools have been canvas-centric. Advances in artificial intelligence, however, have started a shift toward more object-centric workflows. Here are several examples of this transition.

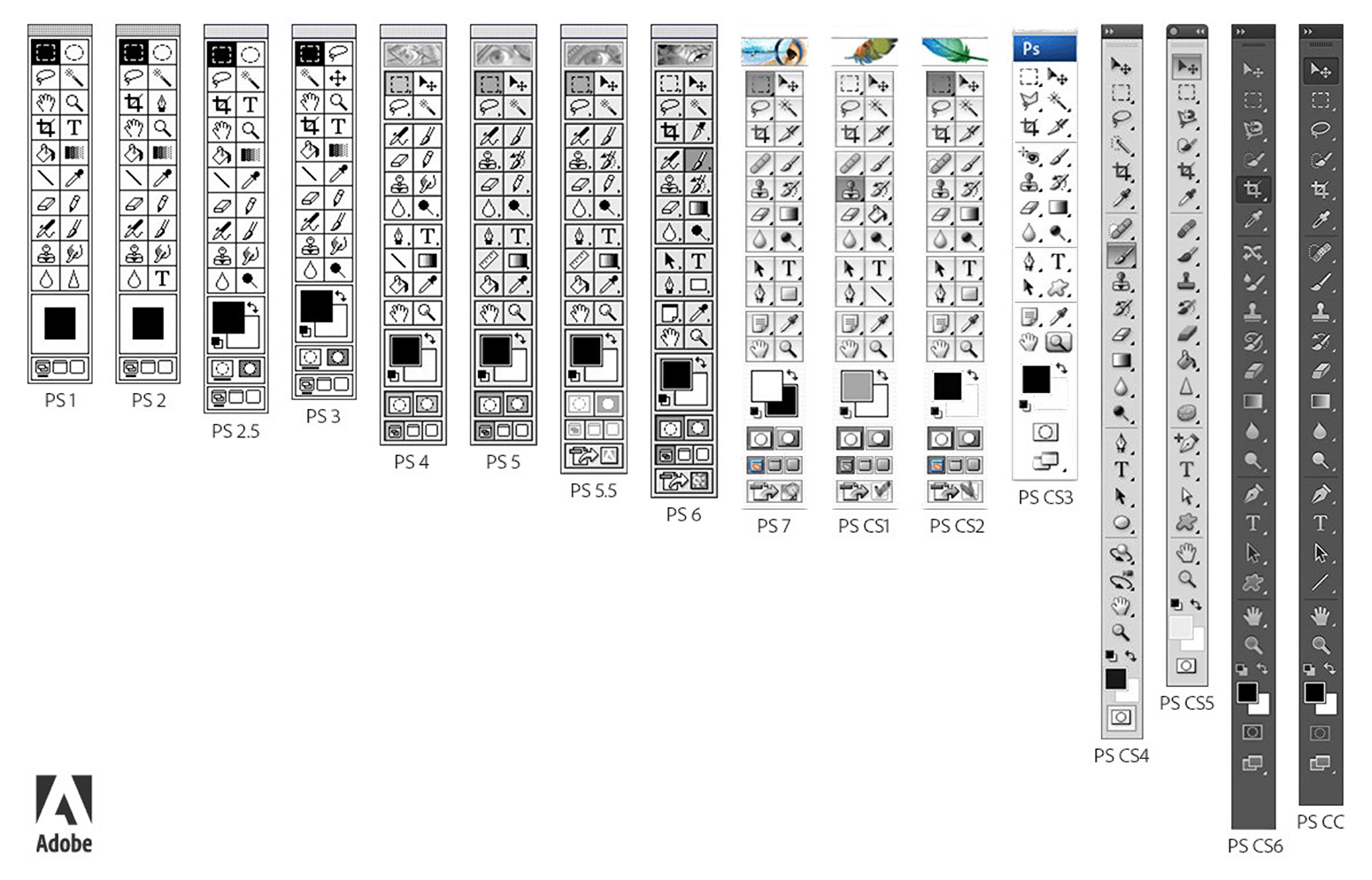

In canvas-centric image or video editing software, you're adding, removing, or changing pixels (or vectors) that represent objects. Photoshop, used by 90% of the world's creative professionals, is a great example. Its ever-increasing set of tools is focused on pixel manipulation. A semantic understanding of the subject matter you're editing isn't really core to Photoshop's interface model.

In an object-centric workflow, on the other hand, every object in an image or video is identified, and you perform editing operations on the objects themselves rather than on the pixels that depict them. For instance, in this example from RunwayML, you can change the character in a video by just dragging one object onto another.

Object-centric workflows allow entities to be easily selected and manipulated semantically ("make it a sunny day") or through direct manipulation ("move this bench here"), as seen in this video from Google Photos' upcoming Magic Editor feature.

Similarly, the Drag Your GAN project enables people to simply drag parts of objects to manipulate them. No need to worry about pixels. That happens behind the scenes.

Object-centric features, of course, can make their way into canvas-centric workflows. Photoshop has been quick to adopt several (below), and it's likely that both pixel and object manipulation tools are needed for a large set of image editing tasks.

Despite that, it's interesting to think about how a truly object-centric user interface for editing might work if it were designed from the ground up with today's capabilities: object manipulation as the high-order bit, pixel editing as a secondary level of refinement.